SIGGRAPH, 2020

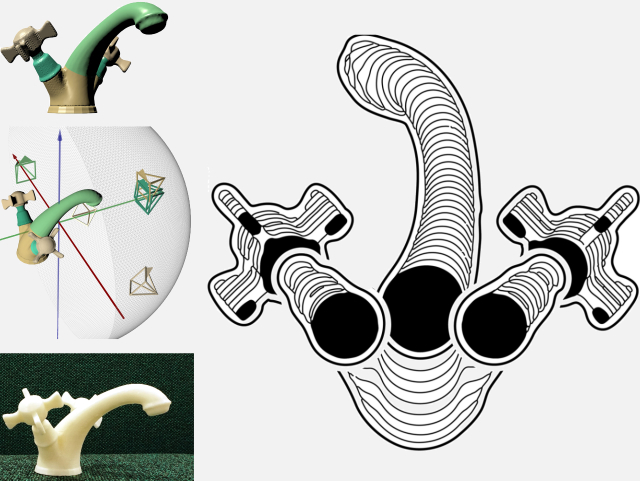

Tactile Line Drawings for Improved Shape Understanding in Blind and Visually Impaired Users

A. Panotopoulou, X. Zhang, T. Qiu, X.D. Yang, E. Whiting

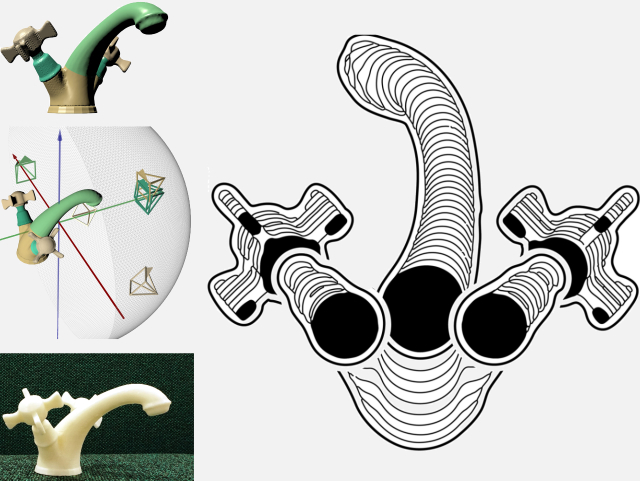

Members of the blind and visually impaired community rely heavily on tactile illustrations - raised line graphics on paper that are felt by hand - to understand geometric ideas in school textbooks, depict a story in children's books, or conceptualize exhibits in museums. However, these illustrations often fail to achieve their goals, in large part due to a lack of understanding of how 3D shapes can be represented in 2D projections. This paper describes a new technique to design tactile illustrations considering the needs of blind individuals. Successful illustration design of 3D objects presupposes identification and combination of important information in topology and geometry. We propose a twofold approach to improve shape understanding. First, we introduce a part-based multi-projection rendering strategy to display geometric information of 3D shapes, making use of canonical viewpoints and removing reliance on traditional perspective projections. Second, curvature information is extracted from cross sections and embedded as textures in our illustrations.

Eurographics, 2018

Watercolor Woodblock Printing with Image Analysis

A. Panotopoulou, S. Paris, E. Whiting

Watercolor paintings have a unique look that mixes subtle color gradients and sophisticated diffusion patterns. This makes them immediately recognizable and gives them a unique appeal. Creating such paintings requires advanced skills that are beyond the reach of most people. Even for trained artists, producing several copies of a painting is a tedious task. One can resort to scanning an existing painting and printing replicas, but these are all identical and have lost an essential characteristic of a painting, its uniqueness. We address these two issues with a technique to fabricate woodblocks that we later use to create watercolor prints. The woodblocks can be reused to produce multiple copies but each print is unique due to the physical process that we introduce. We also design an image processing pipeline that helps users to create the woodblocks and describe a protocol that produces prints by carefully controlling the interplay between the paper, ink pigments, and water so that the final piece depicts the desired scene while exhibiting the distinctive features of watercolor. Our technique enables anyone with the resources to produce watercolor prints.

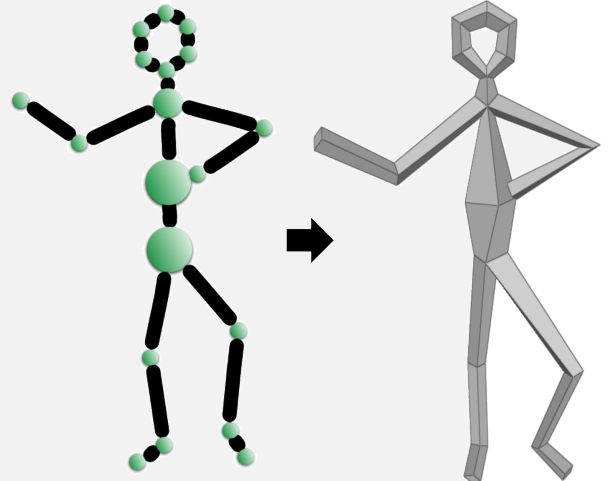

Women in Mathematics, 2018

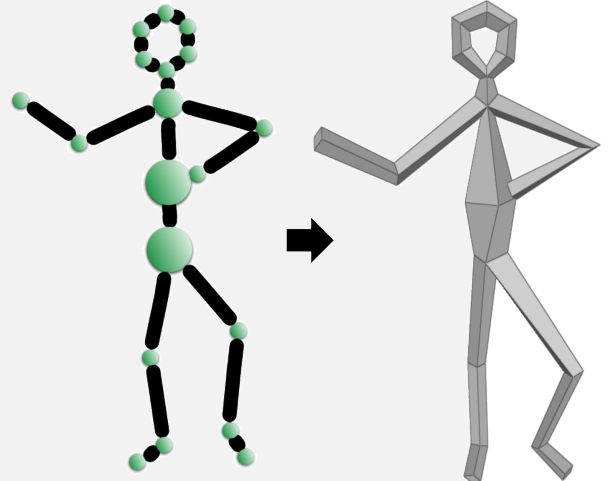

Scaffolding a Skeleton

A. Panotopoulou, E. Ross, K. Welker, E. Hubert, G. Morin

The goal of this paper is to construct a quadrilateral mesh around a one-dimensional skeleton that is as coarse as possible, the “scaffold.” A skeleton allows one to quickly describe a shape, in particular a complex shape of high genus. The constructed scaffold is then a potential support for the surface representation: it provides a topology for the mesh, a domain for parametric representation (a quad-mesh is ideal for tensor product splines), or, together with the skeleton, a grid support on which to project an implicit surface that is naturally defined by the skeleton through convolution. We provide a constructive algorithm to derive a quad-mesh scaffold with topologically regular cross-sections (which are also quads) and no T-junctions. We show that this construction is optimal in the sense that no coarser quad-mesh with topologically regular cross-sections may be constructed. Finally, we apply an existing rotation minimization algorithm along the skeleton branches, which produces a mesh with a natural edge flow along the shape.

SIGGRAPH Asia, 2016

Interactive Resonance Simulation for Free-form Print-wind Instrument Design

N. Umetani, A. Panotopoulou, R. Schmidt, E. Whiting

This paper presents an interactive design interface for three-dimensional free-form musical wind instruments. The sound of a wind instrument is governed by the acoustic resonance as a result of complicated interactions of sound waves and internal geometries of the instrument. Thus, creating an original free-form wind instrument by manual methods is a challenging problem. Our interface provides interactive sound simulation feedback as the user edits, allowing exploration of original wind instrument designs. Sound simulation of a 3D wind musical instrument is known to be computationally expensive. To overcome this problem, we first model the wind instruments as a passive resonator, where we ignore coupled oscillation excitation from the mouthpiece. Then we present a novel efficient method to estimate the resonance frequency based on the boundary element method by formulating the resonance problem as a minimum eigenvalue problem. Furthermore, we can efficiently compute an approximate resonance frequency using a new technique based on a generalized eigenvalue problem. The designs can be fabricated using a 3D printer, thus we call the results "print-wind instruments" in association with woodwind instruments. We demonstrate our approach with examples of unconventional shapes performing familiar songs.

SIGGRAPH Asia, 2015

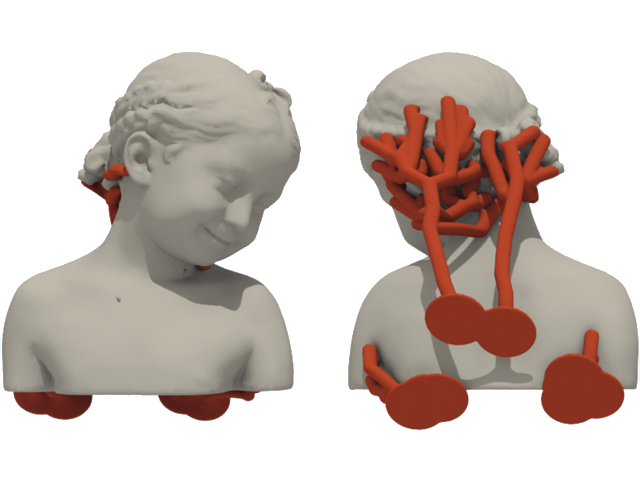

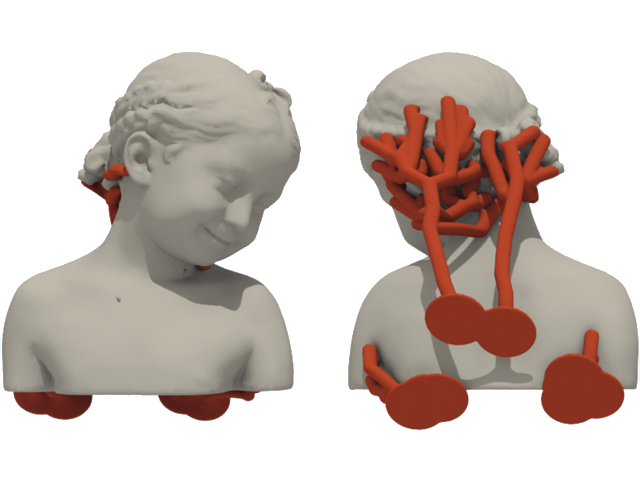

Perceptual Models of Preference in 3D Printing Direction

X. Zhang, X. Le, A. Panotopoulou, E. Whiting, C. Wang

This paper introduces a perceptual model for determining 3D printing orientations. Additive manufacturing methods involving low-cost 3D printers often require robust branching support structures to prevent material collapse at overhangs. Although the designed shape can successfully be made by adding supports, residual material remains at the contact points after the supports have been removed, resulting in unsightly surface artifacts. Moreover, fine surface details on the fabricated model can easily be damaged while removing supports. To prevent the visual impact of these artifacts, we present a method to find printing directions that avoid placing supports in perceptually significant regions. Our model for preference in 3D printing direction is formulated as a combination of metrics including area of support, visual saliency, preferred viewpoint and smoothness preservation. We develop a training-and-learning methodology to obtain a closed-form solution for our perceptual model and perform a large-scale study. We demonstrate the performance of this perceptual model on both natural and man-made objects.

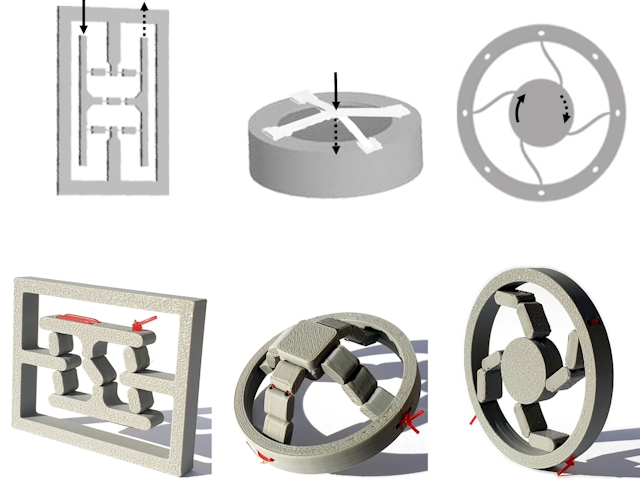

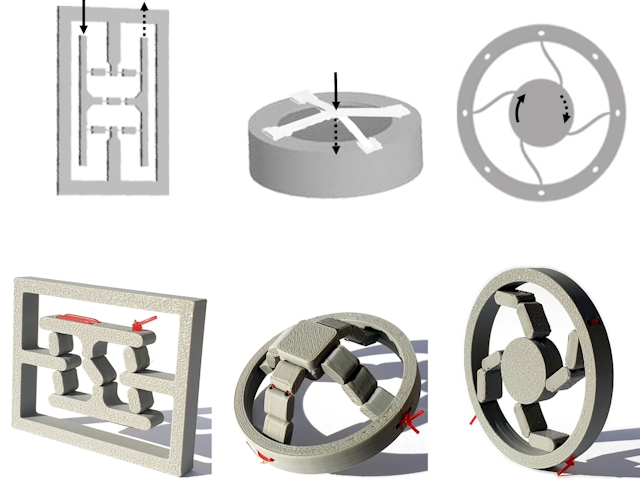

Symposium on Computational Fabrication, 2024

Threa-D Printing Tunable Bistable Mechanisms

A. Panotopoulou, V. Savage, D. L. Ashbrook

Nowadays, with 3D printers becoming more accessible, hobbyists aim to create artifacts with integrated functional mechanisms. Many bistable mechanisms could be integrated into a variety of objects; however, adjusting their designs for different applications is challenging when only low-cost hobbyist printers and commonly used filaments are available. These mechanisms include thin elements, which complicate their adoption, and they cannot be easily tuned post-fabrication. We adapt existing designs using elastic threads and common filaments. Unlike the original designs, the designs we showcase are robust even though they are made with low-cost 3D printers.

Ongoing.

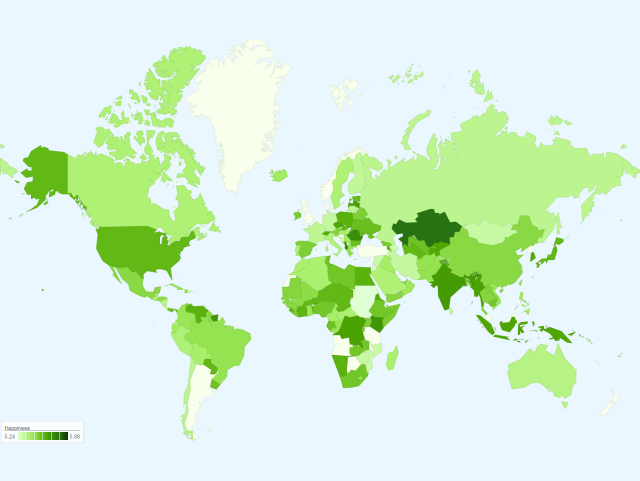

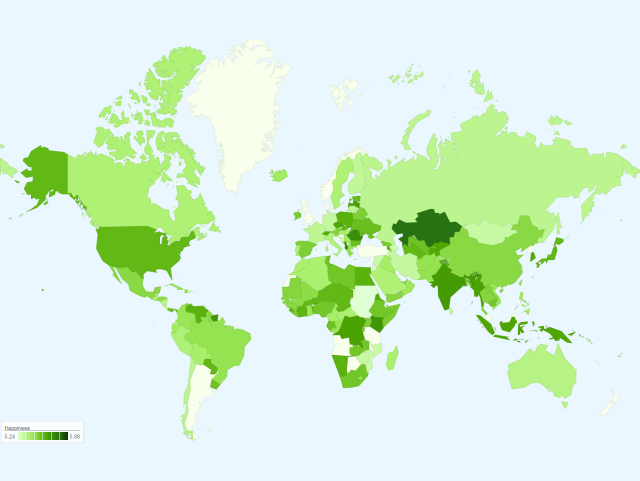

Dartmouth CS Colloquium, 2013

Sentiment Analysis of Constitutions

A. Panotopoulou, N. Foti, D. Rockmore

Sentiment analysis refers to the use of natural language processing to identify the polarity of a document. In our work, we use a dataset of sentimentally ranked commonly used words (labMT) and a dataset of about 500 constitutional preambles of 171 countries; we focus on the preambles since most often they are not a legally operative part of the constitution. We apply an existing method to a novel task: quantitatively measuring the happiness of constitutions. Specifically, the input is a constitutional preamble and the output is a score ranging from 1 to 9 which represents the happiness of the input text, with 9 representing the extreme positive and 1 the extreme negative sentiment.

This work was later published in [Ginsburg et al., 2013] as shown in the citation.
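As a rough illustration of lexicon-based scoring of this kind, the sketch below averages per-word scores over the words of a text that appear in a lexicon, weighted by how often each word occurs. It is a minimal sketch under stated assumptions, not the procedure used in the work: the function name `happiness_score`, the tokenization rule, and the `TOY_LEXICON` values are all hypothetical, and the scores are made-up placeholders rather than real labMT entries.

```python
import re
from collections import Counter

# Hypothetical word scores on a 1-9 scale. These are placeholders for
# illustration only and are NOT taken from the real labMT word list.
TOY_LEXICON = {"peace": 8.0, "freedom": 8.0, "war": 2.0, "people": 6.0}


def happiness_score(text, lexicon):
    """Return the frequency-weighted mean score of the lexicon words in text.

    Words missing from the lexicon are ignored. Returns None when no word
    of the text is in the lexicon, since the mean is then undefined.
    """
    # Assumed tokenization: lowercase runs of letters and apostrophes.
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w in lexicon)
    total = sum(counts.values())
    if total == 0:
        return None
    return sum(lexicon[w] * n for w, n in counts.items()) / total


score = happiness_score("We the people seek peace and freedom, not war.", TOY_LEXICON)
print(score)
```

With a lexicon whose entries lie between 1 and 9, the result also lies between 1 and 9, matching the output range described above.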

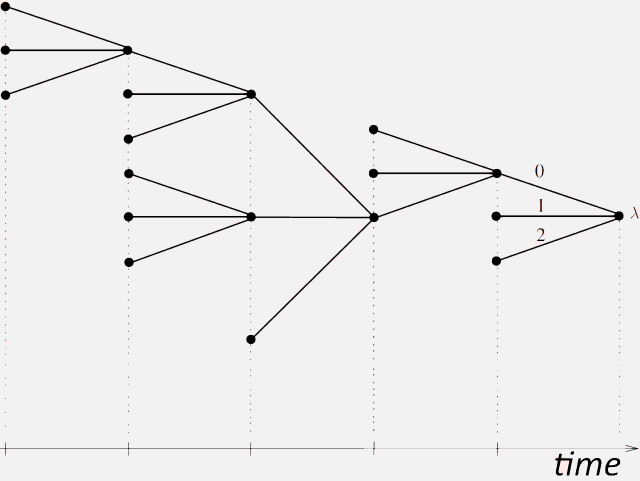

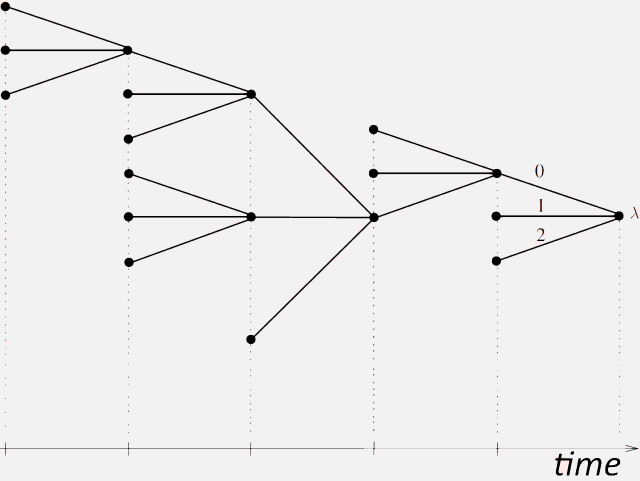

Information Theory Workshop, 2012

Bayesian Inference for Discrete Time Series via Tree Weighting

I. Kontoyiannis, A. Panotopoulou, M. Skoularidou

Inferring temporal dependencies in discrete time series data (such as pitch in a birdsong, words in a text, or the daily price of a stock) is important in many areas. Some of these dependencies can be modeled as variable-length Markov chains (tree models), but their computation is considered a time- and memory-intensive task. We develop a linear-time algorithm for the computation of the most likely model that fits the input data. Our approach generalizes the Context Tree Weighting Method [Willems et al.]; we then extend it and prove that it can be used to obtain not only the first but the k most likely tree models. Finally, we test these algorithms on a variety of synthetic datasets.

This work was published in [Mertzanis et al., 2018] and [Kontoyiannis et al., 2020] as shown in the citations.
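For readers unfamiliar with the starting point, the sketch below shows the classic binary Context Tree Weighting mixture that the abstract refers to, not the generalization or the k-most-likely-models algorithm developed in this work. It assumes a binary alphabet, the Krichevsky-Trofimov estimator at each node, equal weights of 1/2, and that the first `depth` symbols serve only as initial context; the function names are hypothetical, and this batch version favors clarity over efficiency.

```python
import math
from collections import defaultdict


def kt_log_prob(zeros, ones):
    """Log of the Krichevsky-Trofimov estimate for the given binary counts."""
    return (math.lgamma(zeros + 0.5) + math.lgamma(ones + 0.5)
            - math.log(math.pi) - math.lgamma(zeros + ones + 1))


def ctw_log_prob(bits, depth):
    """Log CTW probability of bits[depth:] given bits[:depth] as context."""
    # counts[context] = [zeros, ones] seen after that context, where a
    # context is a tuple of past symbols with the most recent one first.
    counts = defaultdict(lambda: [0, 0])
    for t in range(depth, len(bits)):
        context = tuple(bits[t - 1 - k] for k in range(depth))
        for d in range(depth + 1):
            counts[context[:d]][bits[t]] += 1

    def weighted(node):
        if node not in counts:
            return 0.0  # a node that saw no symbols has probability 1
        log_pe = kt_log_prob(*counts[node])
        if len(node) == depth:
            return log_pe  # leaves use the KT estimate directly
        log_children = weighted(node + (0,)) + weighted(node + (1,))
        # log(0.5 * exp(log_pe) + 0.5 * exp(log_children)), computed stably
        hi, lo = max(log_pe, log_children), min(log_pe, log_children)
        return math.log(0.5) + hi + math.log1p(math.exp(lo - hi))

    return weighted(())


print(math.exp(ctw_log_prob([0, 0, 0], depth=1)))
```

Each internal node averages two explanations of its data: a single memoryless estimate at that node, or the product of its children's weighted probabilities, which corresponds to conditioning on one more past symbol.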